You are working with the text-only light edition of "H.Lohninger: Teach/Me Data Analysis, Springer-Verlag, Berlin-New York-Tokyo, 1999. ISBN 3-540-14743-8". Click here for further information.


Distance and Similarity Measures


See also: cluster analysis

Distances between objects in multidimensional space form the basis of many multivariate methods of data analysis, and the choice of distance measure can influence the results of such a method considerably. Similarity of objects and distances between them are closely related and are often confused. While the term distance has a precise mathematical meaning, the meaning of the term similarity often depends on the circumstances and the field of application.

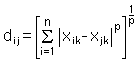

with k being the index of the coordinates, and p determining the type of distance.
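The formula above can be sketched in a few lines of Python. This is a minimal illustration, not code from the original text; the function name `minkowski` is hypothetical. The case p = ∞ is handled separately as the limit of the formula, in which only the largest coordinate difference survives.

```python
import math
from typing import Sequence


def minkowski(x: Sequence[float], y: Sequence[float], p: float) -> float:
    """Minkowski distance d_p(x, y) = (sum_k |x_k - y_k|**p) ** (1/p).

    p = 1 gives the city block (Manhattan) distance, p = 2 the Euclidean
    distance, and p = math.inf the Chebyshev (maximum) distance.
    """
    if len(x) != len(y):
        raise ValueError("x and y must have the same number of coordinates")
    if p < 1:
        raise ValueError("p must be >= 1 for the result to be a metric")
    diffs = [abs(a - b) for a, b in zip(x, y)]
    if math.isinf(p):
        # Limit p -> infinity: only the largest coordinate difference counts
        return max(diffs, default=0.0)
    return sum(d ** p for d in diffs) ** (1.0 / p)


x, y = [0.0, 0.0], [3.0, 4.0]
print(minkowski(x, y, 1))         # 7.0
print(minkowski(x, y, 2))         # 5.0
print(minkowski(x, y, math.inf))  # 4.0
```

For the same pair of points the distance shrinks as p grows, because larger p gives more weight to the largest coordinate difference.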

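There are three special cases of the Minkowski distance:

- p = 1: the city block distance, also called Manhattan distance
- p = 2: the well-known Euclidean distance
- p = ∞: the Chebyshev distance, i.e. the largest absolute difference between corresponding coordinates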

The various forms of the Minkowski distance do not account for different scales (units) of the individual coordinates. If the coordinates span different ranges, the coordinate with the largest range will dominate the results. You therefore have to scale the data before calculating the distances. Furthermore, any correlations between variables (coordinates) will also distort the distances. To overcome these drawbacks, one should calculate the Mahalanobis distance, which allows for correlation and different scalings.
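The dominance of the coordinate with the largest range can be shown with a small sketch. It assumes z-score standardization (subtract each column's mean, divide by its population standard deviation) as the scaling step; the text does not prescribe a particular scaling method, and the data and the names `euclidean` and `standardize` are hypothetical.

```python
import math
import statistics


def euclidean(x, y):
    """Euclidean distance (Minkowski distance with p = 2)."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, y)))


def standardize(rows):
    """Scale every column to zero mean and unit population standard deviation."""
    columns = list(zip(*rows))
    means = [statistics.mean(c) for c in columns]
    sds = [statistics.pstdev(c) for c in columns]
    if any(s == 0 for s in sds):
        raise ValueError("a constant column cannot be standardized")
    return [[(v - m) / s for v, m, s in zip(row, means, sds)] for row in rows]


# Hypothetical data: column 0 spans 0..1, column 1 spans 0..100
data = [[0.0, 0.0], [1.0, 0.0], [0.0, 100.0], [1.0, 100.0]]

# Unscaled: the second coordinate dominates (distances 1 vs. 100)
print(euclidean(data[0], data[1]), euclidean(data[0], data[2]))  # 1.0 100.0

# Standardized: both coordinates contribute equally
z = standardize(data)
print(euclidean(z[0], z[1]), euclidean(z[0], z[2]))              # 2.0 2.0
```

Using the sample instead of the population standard deviation would multiply every distance by the same factor, so the relative distances would not change.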


The Mahalanobis distance is related to the Euclidean distance and yields the same values for uncorrelated, standardized data. It is calculated by including the inverse of the covariance matrix in the distance computation:

$$d(x,y) = \sqrt{(x - y)^{T}\, C^{-1}\, (x - y)}$$

with C being the covariance matrix of the data.
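A minimal pure-Python sketch of the Mahalanobis distance follows; it is an illustration, not the original author's implementation, and all function names as well as the covariance matrix in the usage lines are hypothetical. Instead of inverting C explicitly, it solves the linear system C z = (x - y) by Gaussian elimination, which gives the same quadratic form. With the identity matrix as covariance (uncorrelated, standardized data) the result equals the Euclidean distance.

```python
import math


def solve(a, b):
    """Solve a @ z = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("covariance matrix is singular")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    z = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        z[r] = (m[r][n] - sum(m[r][c] * z[c] for c in range(r + 1, n))) / m[r][r]
    return z


def covariance(rows):
    """Sample covariance matrix of the columns of rows."""
    n, k = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(k)]
    return [[sum((r[i] - means[i]) * (r[j] - means[j]) for r in rows) / (n - 1)
             for j in range(k)] for i in range(k)]


def mahalanobis(x, y, cov):
    """sqrt((x - y)^T C^-1 (x - y)), computed without forming C^-1."""
    d = [a - b for a, b in zip(x, y)]
    z = solve(cov, d)  # z = C^-1 (x - y)
    return math.sqrt(sum(di * zi for di, zi in zip(d, z)))


# Hypothetical covariance matrix of two strongly correlated variables
cov = [[1.0, 0.8], [0.8, 1.0]]
origin = [0.0, 0.0]
print(round(mahalanobis(origin, [1.0, 1.0], cov), 3))   # 1.054 (along the correlation)
print(round(mahalanobis(origin, [1.0, -1.0], cov), 3))  # 3.162 (against it)
print(mahalanobis(origin, [3.0, 4.0], [[1.0, 0.0], [0.0, 1.0]]))  # 5.0, same as Euclidean
```

Both test points have the same Euclidean distance from the origin, yet the point lying against the correlation is about three times farther away in the Mahalanobis sense. In practice C would be estimated from the data set, e.g. with `covariance(rows)`.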

Another distance measure, which is rather a measure of the similarity between two objects, has been proposed by Jaccard (it is also called the Tanimoto coefficient):


$$J(x,y) = \frac{(x \cdot y)}{\lVert x \rVert^{2} + \lVert y \rVert^{2} - (x \cdot y)},$$

with (x·y) being the inner product of the two vectors x and y. Note that the Jaccard coefficient equals 1.0 for objects with zero distance, i.e. for identical objects.
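The coefficient can be computed directly from inner products, as in the following sketch. The function names `dot` and `tanimoto` are hypothetical, and the example vectors are made up for illustration.

```python
def dot(x, y):
    """Inner product (x.y) of two equally long vectors."""
    return sum(a * b for a, b in zip(x, y))


def tanimoto(x, y):
    """Jaccard/Tanimoto coefficient (x.y) / (|x|^2 + |y|^2 - (x.y))."""
    xy = dot(x, y)
    denom = dot(x, x) + dot(y, y) - xy
    if denom == 0:
        # Only happens when both vectors are zero vectors
        raise ValueError("coefficient is undefined for two zero vectors")
    return xy / denom


print(tanimoto([1, 0, 1, 1], [1, 0, 1, 1]))  # 1.0 (identical objects)
print(tanimoto([1, 0, 1, 1], [1, 1, 0, 1]))  # 0.5
```

For binary (0/1) vectors the formula reduces to the number of positions where both vectors are 1, divided by the number of positions where at least one of them is 1.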

Last Update: 2006-Jan-17