You are working with the text-only light edition of "H.Lohninger: Teach/Me Data Analysis, Springer-Verlag, Berlin-New York-Tokyo, 1999. ISBN 3-540-14743-8". Click here for further information.


Derivation of a Univariate Regression Formula

See also: regression, Curvilinear Regression, Regression - Confidence Interval

Let us carry out this procedure for a particular example, a model with a linear and a quadratic term and no intercept:

$$y = ax + bx^2$$

The parameters $a$ and $b$ of this formula are to be estimated from a series of data points $[x_i, y_i]$, where the $x_i$ are the values of the independent variable and the $y_i$ are the observed values that the model should reproduce. Substituting the $y_i$ with their estimates $ax_i + bx_i^2$ yields the series of data points $[x_i,\ ax_i + bx_i^2]$. The actual $y$ values are, however, the $y_i$. Thus the sum of squared errors $S$ for $n$ data points is defined by

$$S = (ax_1 + bx_1^2 - y_1)^2 + (ax_2 + bx_2^2 - y_2)^2 + (ax_3 + bx_3^2 - y_3)^2 + \dots + (ax_n + bx_n^2 - y_n)^2 = \sum_{i=1}^{n} (ax_i + bx_i^2 - y_i)^2$$
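To make the definition of S concrete, here is a minimal Python sketch that evaluates the sum of squared errors for given parameters. The function name `sum_squared_errors` and the sample data are assumptions made for illustration only; they do not come from the original text.

```python
def sum_squared_errors(a, b, xs, ys):
    """S(a, b) = sum over i of (a*x_i + b*x_i**2 - y_i)**2."""
    return sum((a * x + b * x**2 - y) ** 2 for x, y in zip(xs, ys))


# Hypothetical data, generated exactly from y = 2x + 0.5x^2
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0 * x + 0.5 * x**2 for x in xs]

print(sum_squared_errors(2.0, 0.5, xs, ys))  # 0.0 (the generating parameters)
print(sum_squared_errors(1.0, 0.5, xs, ys))  # 30.0 (a wrong value of a)
```

With the generating parameters the error vanishes. With a = 1 every point is off by x_i, so S = 1 + 4 + 9 + 16 = 30.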

Now we have to calculate the partial derivatives with respect to the parameters a and b, and equate them to zero:

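$$\frac{\partial S}{\partial a} = 0 = 2(ax_1 + bx_1^2 - y_1)x_1 + 2(ax_2 + bx_2^2 - y_2)x_2 + 2(ax_3 + bx_3^2 - y_3)x_3 + \dots + 2(ax_n + bx_n^2 - y_n)x_n$$

$$\frac{\partial S}{\partial b} = 0 = 2(ax_1 + bx_1^2 - y_1)x_1^2 + 2(ax_2 + bx_2^2 - y_2)x_2^2 + 2(ax_3 + bx_3^2 - y_3)x_3^2 + \dots + 2(ax_n + bx_n^2 - y_n)x_n^2$$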
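The two partial derivatives can be checked numerically. The sketch below compares them with central finite differences of S. The function names, the step size `h`, and the data values are hypothetical and chosen only for this illustration.

```python
def sse(a, b, xs, ys):
    """Sum of squared errors for the model y = a*x + b*x**2."""
    return sum((a * x + b * x**2 - y) ** 2 for x, y in zip(xs, ys))


def dS_da(a, b, xs, ys):
    """Analytic partial derivative of S with respect to a."""
    return sum(2 * (a * x + b * x**2 - y) * x for x, y in zip(xs, ys))


def dS_db(a, b, xs, ys):
    """Analytic partial derivative of S with respect to b."""
    return sum(2 * (a * x + b * x**2 - y) * x**2 for x, y in zip(xs, ys))


# Hypothetical measurements and an arbitrary trial point (a, b)
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.4, 6.1, 10.3, 16.2]
a, b, h = 1.5, 0.7, 1e-6

# Central differences approximate the derivatives without any calculus
numeric_da = (sse(a + h, b, xs, ys) - sse(a - h, b, xs, ys)) / (2 * h)
numeric_db = (sse(a, b + h, xs, ys) - sse(a, b - h, xs, ys)) / (2 * h)

print(dS_da(a, b, xs, ys), numeric_da)
print(dS_db(a, b, xs, ys), numeric_db)
```

Because S is quadratic in both a and b, the central differences should agree with the analytic expressions up to rounding error.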

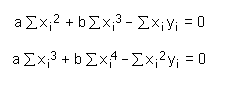

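These two equations can easily be reduced by dividing by 2 and introducing the sums of the individual terms (all sums run over $i = 1, \dots, n$):

$$a\sum x_i^2 + b\sum x_i^3 - \sum x_i y_i = 0$$

$$a\sum x_i^3 + b\sum x_i^4 - \sum x_i^2 y_i = 0$$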

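Now solve the first equation for the coefficient $a$ and the second for the coefficient $b$:

$$a = \frac{\sum x_i y_i - b\sum x_i^3}{\sum x_i^2}, \qquad b = \frac{\sum x_i^2 y_i - a\sum x_i^3}{\sum x_i^4}$$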

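Then substitute the expression for $b$ into the equation for $a$, and vice versa, which gives the following final results:

$$a = \frac{\sum x_i y_i \sum x_i^4 - \sum x_i^2 y_i \sum x_i^3}{\sum x_i^2 \sum x_i^4 - \left(\sum x_i^3\right)^2}$$

$$b = \frac{\sum x_i^2 \sum x_i^2 y_i - \sum x_i^3 \sum x_i y_i}{\sum x_i^2 \sum x_i^4 - \left(\sum x_i^3\right)^2}$$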
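As a hedged illustration of the end result, the following Python sketch computes a and b from the five sums that appear when the two zero-derivative conditions are solved as a 2x2 linear system. The function name `fit_ax_bx2` and the test data are assumptions introduced here, not part of the original text.

```python
def fit_ax_bx2(xs, ys):
    """Least-squares estimates (a, b) for the model y = a*x + b*x**2."""
    sx2 = sum(x**2 for x in xs)
    sx3 = sum(x**3 for x in xs)
    sx4 = sum(x**4 for x in xs)
    sxy = sum(x * y for x, y in zip(xs, ys))
    sx2y = sum(x**2 * y for x, y in zip(xs, ys))

    # Determinant of the system
    #   a*sx2 + b*sx3 = sxy
    #   a*sx3 + b*sx4 = sx2y
    det = sx2 * sx4 - sx3**2
    if det == 0:
        raise ValueError("x values do not determine a and b uniquely")

    a = (sxy * sx4 - sx2y * sx3) / det
    b = (sx2 * sx2y - sx3 * sxy) / det
    return a, b


# Hypothetical data, generated exactly from y = 2x + 0.5x^2
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0 * x + 0.5 * x**2 for x in xs]

print(fit_ax_bx2(xs, ys))  # (2.0, 0.5)
```

For noise-free data the generating parameters are recovered exactly. The determinant check guards against degenerate inputs, for example a single distinct x value, where the two equations are no longer independent.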

Last Update: 2006-Jan-17